If you've ever wanted to download files from many different archive.org items in an automated way, here is one method to do it.

____________________________________________________________

Here's an overview of what we'll do:

1. Confirm or install a terminal emulator and wget

2. Create a list of archive.org item identifiers

3. Craft a wget command to download files from those identifiers

4. Run the wget command.

____________________________________________________________

Requirements

Required: a terminal emulator and wget installed on your computer. Below are instructions to determine if you already have these.

Recommended but not required: understanding of basic unix commands and of archive.org item structure and terminology.

____________________________________________________________

Section 1. Determine if you have a terminal emulator and wget.

If not, they need to be installed (they're free)

1. Check to see if you already have wget installed

If you already have a terminal emulator such as Terminal (Mac) or Cygwin (Windows) you can check if you also have wget installed. If you do not have them both installed, go to step 2 below (installing a terminal emulator and/or wget). Here's how to check to see if you have wget using your terminal emulator:

1. Open Terminal (Mac) or Cygwin (Windows)

2. Type "which wget" after the $ sign

3. If you have wget the result should show what directory it's in, such as /usr/bin/wget. If you don't have it there will be no results.
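The same check can be scripted. This is a minimal sketch, assuming a POSIX-style shell such as the one in Terminal or Cygwin; have_command is an illustrative helper name, not a standard utility.

```shell
# Report whether a program is available on the PATH.
# have_command is an illustrative helper name, not part of wget.
have_command() {
    command -v "$1" >/dev/null 2>&1
}

if have_command wget; then
    echo "wget found at: $(command -v wget)"
else
    echo "wget not found; see the install instructions"
fi
```

Like "which wget", this prints the location when wget is installed, and it prints an explicit message instead of nothing when it is not.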

2. To install a terminal emulator and/or wget:

Windows: To install a terminal emulator along with wget please read Installing Cygwin Tutorial. Be sure to choose the wget module option when prompted.

MacOSX: MacOSX comes with Terminal installed. You should find it in the Utilities folder (Applications > Utilities > Terminal). There are no official binaries of wget available for Mac OS X. Instead, you must either build wget from source code or download an unofficial binary created elsewhere. The following links may be helpful for getting a working copy of wget on Mac OSX.

Prebuilt binary for Mac OSX Lion and Snow Leopard

wget for Mac OSX leopard

Building from source for MacOSX: Skip this step if you are able to install from the above links.

To build from source, you must first install Xcode. Once Xcode is installed there are many tutorials online to guide you through building wget from source, such as How to install wget on your Mac.

____________________________________________________________

Section 2. Now you can use wget to download lots of files

The method for using wget to download files is:

- Generate a list of archive.org item identifiers (the tail end of the URL for an archive.org item page) from which you wish to grab files.

- Create a folder (a directory) to hold the downloaded files

- Construct your wget command to retrieve the desired files

- Run the command and wait for it to finish

Step 1: Create a folder (directory) for your downloaded files

1. Create a folder named "Files" on your computer Desktop. This is where the downloaded files will go. Create it the usual way by using either command-shift-n (Mac) or control-shift-n (Windows).
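If you prefer the terminal, the folder can be created there instead. This is a sketch only: make_files_dir is an illustrative helper name, and the default path assumes your Desktop sits directly inside your home directory. On Cygwin, pass the /cygdrive/... path to your Windows Desktop explicitly.

```shell
# Create the download folder if it does not already exist and print its path.
# make_files_dir is an illustrative helper name.
# $1 = folder to create (default: Desktop/Files under your home directory)
make_files_dir() {
    dir="${1:-$HOME/Desktop/Files}"
    mkdir -p "$dir" && echo "$dir"
}

# Usage:
#   make_files_dir                      # creates ~/Desktop/Files
#   make_files_dir /some/other/folder   # creates a folder of your choice
```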

Step 2: Create a file with the list of identifiers

You'll need a text file with the list of archive.org item identifiers from which you want to download files. This file will be used by wget to download the files.

If you already have a list of identifiers you can paste or type the identifiers into a file. There should be one identifier per line. The other option is to use the archive.org search engine to create a list based on a query. To do this we will use advanced search to create the list and then download the list in a file.

First, determine your search query using the search engine. In this example, I am looking for items in the Prelinger collection with the subject "Health and Hygiene." There are currently 41 items that match this query. Once you've figured out your query:

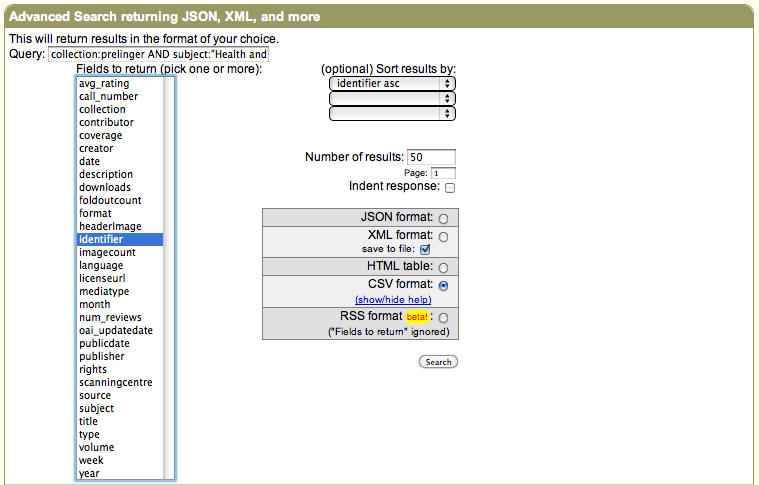

1. Go to the advanced search page on archive.org. Use the "Advanced Search returning JSON, XML, and more." section to create a query. Once you have a query that delivers the results you want, click the back button to go back to the advanced search page.

3. Select "identifier" from the "Fields to return" list.

4. Optionally sort the results (sorting by "identifier asc" is handy for arranging them in alphabetical order.)

5. In the "Number of results" box, enter a number that matches (or is higher than) the number of results your query returned in step 1.

6. Choose the "CSV format" radio button.

This image shows what the advanced query would look like for our example:

7. Click the search button (may take a while depending on how many results you have.) An alert box will ask if you want your results – click "OK" to continue. You'll then see a prompt to download the "search.csv" file to your computer. The downloaded file will be in your default download location (often your Desktop or your Downloads folder).

8. Rename the "search.csv" file "itemlist.txt" (no quotes.)

9. Drag or move the itemlist.txt file into your "Files" folder that you previously created

10. Open the file in a text program such as TextEdit (Mac) or Notepad (Windows). Delete the first line of copy, which reads "identifier". Be sure you deleted the entire line and that the first line is not a blank line. Now remove all the quotes by doing a search and replace, replacing the " with nothing.

The contents of the itemlist.txt file should now look like this:

AboutFac1941
Attitude1949
BodyCare1948
Cancer_2
Careofth1949
Careofth1951
CityWate1941
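The clean-up in step 10 can also be done from the terminal. This is a minimal sketch, assuming the downloaded CSV has the single "identifier" header line followed by one quoted identifier per line; make_itemlist is an illustrative helper name.

```shell
# Turn the downloaded CSV into a clean identifier list:
#   - drop the first line (the "identifier" header)
#   - strip double quotes and any Windows carriage returns
#   - drop blank lines
# make_itemlist is an illustrative helper name.
# $1 = downloaded CSV file, $2 = identifier list to write
make_itemlist() {
    tail -n +2 "$1" | tr -d '"\r' | sed '/^$/d' > "$2"
}

# Usage, run from the folder that holds search.csv:
#   make_itemlist search.csv itemlist.txt
```

This writes a new file and leaves search.csv untouched, so you can compare the two before downloading anything.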

…………………………………………………………………………………………………………………………

Note: You can use this advanced search method to create lists of thousands of identifiers, although we don't recommend using it to retrieve more than 10,000 or so items at once (it will time out at a certain point).

………………………………………………………………………………………………………………………...

Step 3: Create a wget command

The wget command uses unix terminology. Each symbol, letter or word represents a different option that wget will execute.

Below are 3 typical wget commands for downloading from the identifiers listed in your itemlist.txt file.

To get all files from your identifier list:

wget -r -H -nc -np -nH --cut-dirs=1 -e robots=off -l1 -i ./itemlist.txt -B 'http://archive.org/download/'

If you want to download only certain file formats (in this example pdf and epub) you should include the -A option, which stands for "accept". In this example we would download only the pdf and epub files:

wget -r -H -nc -np -nH --cut-dirs=1 -A .pdf,.epub -e robots=off -l1 -i ./itemlist.txt -B 'http://archive.org/download/'

To download all files except specific formats (in this example tar and zip) you should include the -R option, which stands for "reject". In this case we would download all files except tar and zip files:

wget -r -H -nc -np -nH --cut-dirs=1 -R .tar,.zip -e robots=off -l1 -i ./itemlist.txt -B 'http://archive.org/download/'

If you want to modify one of these or craft a new one you may find it easier to do it in a text editing program (TextEdit or Notepad) rather than doing it in the terminal emulator.
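Another way to avoid typing mistakes is to let a small script assemble the command. This sketch only prints the command so you can review it before pasting it into the terminal. print_wget_command is an illustrative helper name, and the options are the same ones used in the examples above.

```shell
# Print (do not run) a wget command for an identifier list.
# print_wget_command is an illustrative helper name.
# $1 = path to the identifier list
# $2 = optional comma-separated accept list, e.g. ".pdf,.epub"
print_wget_command() {
    list="$1"
    accept="$2"
    cmd="wget -r -H -nc -np -nH --cut-dirs=1"
    if [ -n "$accept" ]; then
        cmd="$cmd -A $accept"
    fi
    echo "$cmd -e robots=off -l1 -i $list -B 'http://archive.org/download/'"
}

print_wget_command ./itemlist.txt .pdf,.epub
```

With these arguments the output is the pdf and epub command shown above. Leaving out the second argument prints the command that fetches all files.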

…………………………………………………………………………………………………………………………

NOTE: To craft a wget command for your specific needs you might need to understand the various options. It can get complicated, so try to get a thorough understanding before experimenting. You can learn more about unix commands at Basic unix commands.

An explanation of each option used in our example wget commands is as follows:

-r recursive download; required in order to move from the item identifier down into its individual files

-H enable spanning across hosts when doing recursive retrieving (the initial URL for the directory will be on archive.org, and the individual file locations will be on a specific datanode)

-nc no clobber; if a local copy already exists of a file, don't download it again (useful if you have to restart the wget at some point, as it avoids re-downloading all the files that were already done during the first pass)

-np no parent; ensures that the recursion doesn't climb back up the directory tree to other items (by, for instance, following the "../" link in the directory listing)

-nH no host directories; when using -r, wget will create a directory tree to stick the local copies in, starting with the hostname ({datanode}.us.archive.org/), unless -nH is provided

--cut-dirs=1 completes what -nH started by skipping the hostname; when saving files on the local disk (from a URL like http://{datanode}.us.archive.org/{drive}/items/{identifier}/{identifier}.pdf), skip the /{drive}/items/ portion of the URL, too, so that all {identifier} directories appear together in the current directory, instead of being buried several levels down in multiple {drive}/items/ directories

-e robots=off archive.org datanodes contain robots.txt files telling robotic crawlers not to traverse the directory structure; in order to recurse from the directory to the individual files, we need to tell wget to ignore the robots.txt directive

-i ../itemlist.txt location of input file listing all the URLs to use; "../itemlist.txt" means the list of items should appear one level up in the directory structure, in a file called "itemlist.txt" (you can call the file anything you want, so long as you specify its actual name after -i). The three example commands above use ./itemlist.txt instead, which points to a file in the current directory.

-B 'http://archive.org/download/' base URL; gets prepended to the text read from the -i file (this is what allows us to have just the identifiers in the itemlist file, rather than the full URL on each line)
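To see what the -i and -B pair amounts to, the sketch below prints the URL each identifier maps to when the base URL is prepended, following the description of -B above. It only prints text and does not contact archive.org. list_item_urls is an illustrative helper name.

```shell
# Print the URL each identifier in the list maps to when the base URL
# is prepended, following the description of -B above.
# list_item_urls is an illustrative helper name.
# $1 = path to the identifier list
list_item_urls() {
    base="http://archive.org/download/"
    # "|| [ -n "$id" ]" also handles a last line with no trailing newline
    while IFS= read -r id || [ -n "$id" ]; do
        if [ -n "$id" ]; then
            echo "${base}${id}"
        fi
    done < "$1"
}

# Usage:
#   list_item_urls ./itemlist.txt
```

Running it on your list before the real download is a quick way to spot stray quotes, blank lines, or a leftover "identifier" header.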

Additional options that may be needed sometimes:

-l depth, --level=depth specify the maximum recursion depth. The default maximum depth is 5. This option is helpful when you are downloading items that contain external links or URLs in either the item's metadata or other text files within the item. Here's an example command to avoid downloading external links contained in an item's metadata:

wget -r -H -nc -np -nH --cut-dirs=1 -l 1 -e robots=off -i ../itemlist.txt -B 'http://archive.org/download/'

-A -R accept-list and reject-list, either limiting the download to certain kinds of file, or excluding certain kinds of file; for example, adding the following options to your wget command would download all files except those whose names end with _orig_jp2.tar or _jpg.pdf:

wget -r -H -nc -np -nH --cut-dirs=1 -R _orig_jp2.tar,_jpg.pdf -e robots=off -i ../itemlist.txt -B 'http://archive.org/download/'

And adding the following options would download all files containing zelazny in their names, except those ending with .ps:

wget -r -H -nc -np -nH --cut-dirs=1 -e robots=off -i ../itemlist.txt -B 'http://archive.org/download/' -A "*zelazny*" -R .ps

See http://www.gnu.org/software/wget/manual/html_node/Types-of-Files.html for a fuller explanation.

…………………………………………………………………………………………………………………………

Step 4: Run the command

1. Open your terminal emulator (Terminal or Cygwin)

2. In your terminal emulator window, move into your folder/directory. To do this:

For Mac: type cd Desktop/Files

For Windows: type in Cygwin after the $: cd /cygdrive/c/Users/archive/Desktop/Files (here "archive" is the example Windows user name; substitute your own)

3. Hit return. You have now moved into the "Files" folder.

4. In your terminal emulator enter or paste your wget command. If you are using one of the commands on this page be sure to copy the entire command, which may be on 2 lines. You can simply cut and paste on Mac. For Cygwin, copy the command, click the Cygwin logo in the upper left corner, select Edit, then select Paste.

5. Hit return to run the command.

You will see your progress on the screen. If you have sorted your itemlist.txt alphabetically, you can estimate how far through the list you are based on the screen output. Depending on how many files you are downloading and their size, it may take quite some time for this command to finish running.

…………………………………………………………………………………………………………………………

Note: We strongly recommend trying this process with just ONE identifier first as a test to make sure you download the files you want before you try to download files from many items.

…………………………………………………………………………………………………………………………
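One way to set up that single-identifier test without editing your full list is to copy its first line into a separate file. This is a sketch; make_test_list and the file name testlist.txt are illustrative, not part of the original instructions.

```shell
# Copy only the first identifier into a separate test list.
# make_test_list is an illustrative helper name.
# $1 = full identifier list, $2 = test list to write
make_test_list() {
    head -n 1 "$1" > "$2"
}

# Usage, from inside the "Files" folder:
#   make_test_list itemlist.txt testlist.txt
#   wget -r -H -nc -np -nH --cut-dirs=1 -e robots=off -l1 -i ./testlist.txt -B 'http://archive.org/download/'
```

Once the test download looks right, point -i back at ./itemlist.txt for the full run.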

Tips:

- You can terminate the command by pressing "control" and "c" on your keyboard simultaneously while in the terminal window.

- If your command will take a while to complete, make sure your computer is set to never sleep and turn off automatic updates.

- If you think you missed some items (e.g. due to machines being down), you can simply rerun the command after it finishes. The "no clobber" option in the command will prevent already retrieved files from being overwritten, so only missed files will be retrieved.
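Before rerunning, you can get a rough idea of which items were missed. The sketch below assumes, as described for --cut-dirs, that each item is saved into a directory named after its identifier. missing_items is an illustrative helper name.

```shell
# List identifiers that have no download directory yet.
# Assumes each item is saved into a directory named after its identifier.
# missing_items is an illustrative helper name.
# $1 = identifier list, $2 = download folder (default: current directory)
missing_items() {
    dir="${2:-.}"
    while IFS= read -r id || [ -n "$id" ]; do
        if [ -n "$id" ] && [ ! -d "$dir/$id" ]; then
            echo "$id"
        fi
    done < "$1"
}

# Usage, from inside the "Files" folder:
#   missing_items itemlist.txt
```

An existing directory only shows that the item was started, not that every file in it arrived, so rerunning the wget command is still the safer check.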


Posted by: glenverbut.blogspot.com
